We have noticed that some of our customers are following the open source trend. Linux is a strong choice of operating system because it is fully compatible with SAP BusinessObjects BI 4 and it also helps companies cut costs. However, Linux retains the way the classic Unix operating system works, so everything revolves around permissions and shell commands. Solid Linux technical know-how is therefore essential before getting started.

The purpose of this blog entry is to share the issues we faced at one of our customers running SAP BusinessObjects BI4 SP4 on Red Hat Enterprise Linux Server release 6.3, using MySQL 5.1.61 as the system database, and how we solved them.

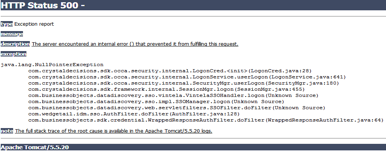

The issue appeared right after a production database migration (to a brand new SQL Server 2008). All WebI documents stopped running from the SAP BI4 Launchpad with an unusual error, "Database Error .[ (IES 10901)", which blocked every single WebI document and put the whole core business at risk. Rich Client on Windows did not experience any problem. After a first analysis, we discovered that the default SQL Server ODBC driver installation on the Linux server was only configured properly for 32-bit connections, whereas WebI requires 64-bit ODBC driver connectivity to run in the SAP BI4 Launchpad.

At this point we had to apply a couple of OSS notes. The first one was OSS note 1607125, "How to configure SQL Server connectivity for WebI from a BI4.0 unix environment". The resolution is:

1. Open env.sh under <install directory>/sap_bobj/setup/

2. Search for the following line

LIBRARYPATH="$LIBDIR:$LIBDIR32:$WCSCOMPONENTDIR:$PLUGINDIST/auth/secEnterprise:${CRPEPATH64}:${CRPEPATH}:${MWHOME}:$PLUGINDIST/desktop/CrystalEnterprise.Report:${BOBJEDIR}enterprise_xi40/$SOFTWAREPATH32/ras:${BOBJEDIR}mysql/lib"

3. Modify the line above by adding the following

":${BOBJEDIR}enterprise_xi40/linux_x64/odbc/lib:${BOBJEDIR}enterprise_xi40/$SOFTWAREPATH32/odbc/lib"

The line should look like this

LIBRARYPATH="$LIBDIR:$LIBDIR32:$WCSCOMPONENTDIR:$PLUGINDIST/auth/secEnterprise:${CRPEPATH64}:${CRPEPATH}:${MWHOME}:$PLUGINDIST/desktop/CrystalEnterprise.Report:${BOBJEDIR}enterprise_xi40/$SOFTWAREPATH32/ras:${BOBJEDIR}mysql/lib:${BOBJEDIR}enterprise_xi40/linux_x64/odbc/lib:${BOBJEDIR}enterprise_xi40/$SOFTWAREPATH32/odbc/lib"

4. Navigate to <install directory>/sap_bobj/enterprise_xi40

5. Open the odbc.ini file using vi or another text editor.

6. Find the entry for the SQL Server DSN. The default DSN entry in odbc.ini is called "[SQL Server Native Wire Protocol]", but it is recommended that you create your own DSN entry using the same parameters specified in the default DSN.

7. Update the "Driver" entry of the DSN to point to the 64-bit version of the SQL Server ODBC driver

Driver=<install directory>/sap_bobj/enterprise_xi40/linux_x64/odbc/lib/CRsqls24.so

8. Restart the SIA
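The env.sh edit in steps 1 to 3 can also be scripted. The following is only a sketch, not part of the OSS note: `add_odbc_libs` is a hypothetical helper name, and it assumes the `LIBRARYPATH=` assignment sits on a single line that ends with a straight double quote. It keeps a backup copy of the file and does nothing if the 64-bit ODBC directory is already listed.

```shell
#!/bin/sh
# Hypothetical helper (not from the OSS note): append the two ODBC library
# directories to the LIBRARYPATH assignment in env.sh.
# Assumption: the assignment is a single line ending in a double quote.
# Usage: add_odbc_libs /path/to/env.sh
add_odbc_libs() {
    env_file=$1
    # Kept in single quotes so the variables are written literally.
    extra=':${BOBJEDIR}enterprise_xi40/linux_x64/odbc/lib:${BOBJEDIR}enterprise_xi40/$SOFTWAREPATH32/odbc/lib'

    # Nothing to do if the 64-bit ODBC directory is already listed.
    if grep -q 'LIBRARYPATH=.*linux_x64/odbc/lib' "$env_file"; then
        return 0
    fi

    # Keep a backup copy before touching the file.
    cp -p "$env_file" "$env_file.bak" || return 1

    # Insert the extra directories just before the closing double quote.
    sed "s|^\([[:space:]]*LIBRARYPATH=\".*\)\"[[:space:]]*\$|\1${extra}\"|" \
        "$env_file.bak" > "$env_file"
}
```

After sourcing the snippet, it would be called as `add_odbc_libs "<install directory>/sap_bobj/setup/env.sh"`, followed by the SIA restart from step 8.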

However, the issue was not completely resolved. Whenever we tried to run a WebI document we received a new error: "Database error: [DataDirect][ODBC lib] System information file not found. Please check the ODBCINI environment variable.. (IES 10901) (WIS 10901)". This is a configuration issue on the Linux operating system with the ODBCINI environment variable. Make sure your environment variables are set correctly according to OSS note 1291142, "Web Intelligence reporting using DataDirect drivers in Unix" (as of today it still applies to BI4). The resolution is:

1. In the Bobje user's Unix profile, add/modify the following environment variables and source the profile

BOBJEDIR=<install_path>/bobje

export BOBJEDIR

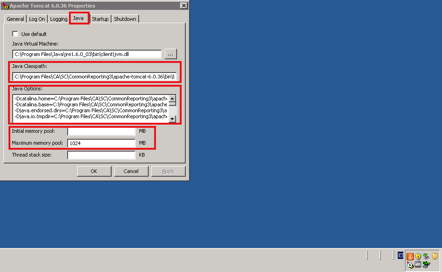

ODBC_HOME=$BOBJEDIR/enterprise120/<platform>/odbc

export ODBC_HOME

ODBCINI=$BOBJEDIR/odbc.ini

export ODBCINI

LD_LIBRARY_PATH=$BOBJEDIR/enterprise120/<platform>/dataAccess/RDBMS/connectionServer:$ODBC_HOME/lib:$BOBJEDIR/enterprise120/<platform>/:$LD_LIBRARY_PATH

export LD_LIBRARY_PATH

NOTE: For AIX, replace LD_LIBRARY_PATH with LIBPATH; for HP-UX, use SHLIB_PATH.

NOTE: Replace <platform> with linux_x86, solaris_sparc, aix_rs6000 or hpux_pa-risc, depending on your specific Unix platform.

NOTE: You must set and export the above environment variables in the same order as shown.

Please make sure to use the file $HOME/.odbc.ini as your default source for ODBC settings. Therefore, modify the ODBCINI variable in the following way:

ODBCINI=$HOME/.odbc.ini

export ODBCINI

2. Modify the odbc.ini to add the DSN

[TestDSN]

Driver=<install_path>/enterprise120/<platform>/odbc/lib/CRmsss23.so

Description=DataDirect 5.3 SQLServer Wire Protocol Driver

Address=<sql_server host or ip>, <port>

Database=<db_name>

QuotedId=Yes

AnsiNPW=No

NOTE: Your DSN name (TestDSN) must be the same DSN name you used when creating the ODBC connection on Windows.

3. DataDirect provides both NON-OEM drivers and OEM drivers

The drivers provided with BI4 are OEM drivers. WebI depends on the ConnectionServer, which by default is set to use non-OEM drivers. We therefore edited the ConnectionServer configuration to allow the use of the OEM-branded DataDirect driver. The steps are:

- Make a backup copy of $BOBJEDIR/enterprise120/<platform>/dataAccess/RDBMS/connectionServer/odbc/odbc.sbo

- Open odbc.sbo with vi and search for DataDirect; there are 4 entries, one for each supported MS SQL Server version.

- Change all 4 from No to Yes: <Parameter Name="Use DataDirect OEM Driver" Platform="Unix">Yes</Parameter>

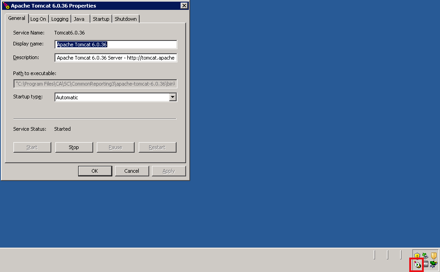

4. Stop all XI servers

Run ./stopservers, log out completely from your Unix shell and log back in (to make sure the new environment variables are set), then start all BI4 servers again.
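Before restarting the servers, the environment and DSN settings from steps 1 and 2 can be sanity-checked from the shell. This is a minimal sketch under stated assumptions: the function names `check_odbcini`, `list_sections` and `has_section` are hypothetical and not part of the OSS note, and the ini file is assumed to use the usual `[Section]` header lines.

```shell
#!/bin/sh
# Hypothetical helpers (not from the OSS note) for checking the ODBC setup.

# Succeed only if ODBCINI is set and points to a readable file.
check_odbcini() {
    if [ -z "${ODBCINI:-}" ]; then
        echo "ODBCINI is not set" >&2
        return 1
    fi
    if [ ! -r "$ODBCINI" ]; then
        echo "ODBCINI points to an unreadable file: $ODBCINI" >&2
        return 2
    fi
    echo "ODBCINI OK: $ODBCINI"
}

# Print every [Section] name found in an odbc.ini-style file.
# Note: this includes non-DSN sections such as [ODBC Data Sources].
list_sections() {
    sed -n 's/^[[:space:]]*\[\(.*\)][[:space:]]*$/\1/p' "$1"
}

# Succeed if the given section (for example a DSN name) exists in the file.
# Usage: has_section <ini file> <section name>
has_section() {
    list_sections "$1" | grep -Fxq "$2"
}
```

A typical check after logging back in would be `check_odbcini && has_section "$ODBCINI" TestDSN`, which only succeeds if the variable resolves to a readable file that contains the DSN.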

After applying the OSS note we were able to retrieve data from SQL Server 2008 when refreshing our WebI documents. However, we noticed that CPU usage reached 100% every time we used a WebI document in any way. Going through the log files we found errors such as "MS SQL Server 2008 |JobId:61340512 |EXIT SQLGetDiagRec with return code -1 (SQL_ERROR)".

We took a look at the odbc.ini file and found that a QEWSD entry, which was not there initially, had somehow appeared. Since we had copied the information from an existing data source, we did not need this entry, so we removed the QEWSD=<random string> line from the ini file.
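Removing the entry by hand in vi works fine; for repeatability, the same cleanup can be sketched as a small shell function. `strip_qewsd` is a hypothetical name, and the sketch assumes the entry sits on its own line starting with `QEWSD=`. A backup copy is kept next to the original.

```shell
#!/bin/sh
# Hypothetical helper: remove any QEWSD=... line from an odbc.ini file,
# keeping a backup copy as <file>.bak.
# Usage: strip_qewsd /path/to/odbc.ini
strip_qewsd() {
    ini_file=$1
    cp -p "$ini_file" "$ini_file.bak" || return 1
    # Delete only lines whose key is QEWSD; everything else is left untouched.
    sed '/^[[:space:]]*QEWSD=/d' "$ini_file.bak" > "$ini_file"
}
```

It would be run as `strip_qewsd "$ODBCINI"`, assuming ODBCINI points to the ini file in use.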

Finally, double-check that <Parameter Name="Use DataDirect OEM Driver" Platform="Unix">Yes</Parameter> is set to Yes in the sqlsrv.sbo file located in /opt/bi40/sap_bobj/enterprise_xi40/dataAccess/connectionServer/odbc.
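The OEM driver flag can be checked, and if necessary switched, without opening the file in an editor. The helpers below are a sketch only: `count_oem_not_yes` and `enable_oem_driver` are hypothetical names, and they assume each parameter is written on a single line, as in the example above.

```shell
#!/bin/sh
# Hypothetical helpers for the "Use DataDirect OEM Driver" parameter in an
# .sbo file. Assumption: each <Parameter> element sits on one line.

# Print how many OEM driver parameters are NOT set to Yes.
count_oem_not_yes() {
    awk '/Name="Use DataDirect OEM Driver"/ && !/>Yes</ { n++ }
         END { print n + 0 }' "$1"
}

# Switch every OEM driver parameter from No to Yes, keeping <file>.bak.
enable_oem_driver() {
    sbo_file=$1
    cp -p "$sbo_file" "$sbo_file.bak" || return 1
    # Only lines carrying the OEM driver parameter are modified.
    sed '/Name="Use DataDirect OEM Driver"/s|>No<|>Yes<|' \
        "$sbo_file.bak" > "$sbo_file"
}
```

For example, `count_oem_not_yes /opt/bi40/sap_bobj/enterprise_xi40/dataAccess/connectionServer/odbc/sqlsrv.sbo` should print 0 once every entry is set to Yes.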

We hope that our experience helps you solve this problem quickly. If you have any tips or suggestions to improve this article, please leave a comment below.