You have finished your standard implementation of SAP Rapid Marts XI and everything went fine, but your customer is starting to have issues with the time the delta loads take. In this article I will explain a couple of approaches to achieve better performance on delta loads of SAP Rapid Marts. In the image below we have the typical infrastructure of SAP Rapid Marts, loading into one single data warehouse.

This infrastructure has pros and cons but I will highlight two main advantages:

- Avoids duplication of information

- Simplifies maintenance from the customer's perspective

1st Approach: One job runs it all

Taking the architecture illustrated above as our basis, the first step to achieve better performance will be to create one single ETL job to run the different SAP Rapid Marts involved in our implementation.

This task is simple; just create one workflow per SAP Rapid Mart containing all the different workflows that are part of each SAP Rapid Mart. Once this task is done, create an ETL job with all the corresponding global variables, drag and drop all the workflows and connect them to create a sequence of execution.

This job also allows us to take advantage of the “execute only once” option in SAP Data Services. This option is set for all the components in SAP Rapid Marts, and it means that each component is executed only once within the same ETL job execution. If you take into account how many components are shared between different SAP Rapid Marts, this approach becomes very interesting.
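The effect of “execute only once” can be pictured with a small simulation. The sketch below is plain Python, not SAP Data Services code, and the Rapid Mart and component names are made up for illustration. It only models the idea that a component shared by several Rapid Marts runs once per job execution.

```python
from typing import Dict, List, Set

# Hypothetical Rapid Marts and their components; the names are illustrative only.
RAPID_MARTS: Dict[str, List[str]] = {
    "Sales": ["DIM_Company", "DIM_Material", "FACT_SalesOrders"],
    "Inventory": ["DIM_Company", "DIM_Material", "FACT_StockLevels"],
    "GeneralLedger": ["DIM_Company", "FACT_GLPostings"],
}


def run_single_job(rapid_marts: Dict[str, List[str]]) -> List[str]:
    """Run all Rapid Marts in one job, executing each component only once."""
    already_executed: Set[str] = set()
    execution_log: List[str] = []
    for components in rapid_marts.values():
        for component in components:
            if component in already_executed:
                continue  # shared component: skipped on later occurrences
            execution_log.append(component)
            already_executed.add(component)
    return execution_log


def run_separate_jobs(rapid_marts: Dict[str, List[str]]) -> List[str]:
    """Run each Rapid Mart as its own job: shared components run every time."""
    return [c for components in rapid_marts.values() for c in components]
```

With these made-up names, separate jobs perform 8 component executions while the single job performs 5, because the shared dimensions are loaded only once.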

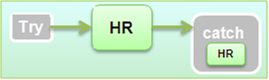

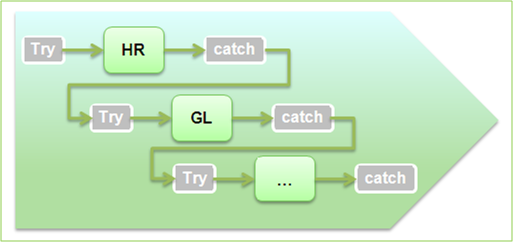

In addition, this approach allows us to build a try/catch strategy into the ETL process. Some customer environments have intermittent issues (e.g. network errors) that can crash the execution of our daily loads. We will wrap every workflow of the job in try and catch statements, and inside each catch we will place again the workflow that we were trying to execute. The following image illustrates the idea:

The try/catch + “execute only once” strategy allows you to retry the execution of a component of the ETL job and continue the execution where it stopped.
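As a rough illustration of this retry behaviour, here is a minimal Python sketch. It is not Data Services script: `TransientError`, `run_with_retry` and `make_flaky_runner` are hypothetical names, and the `completed` set stands in for the bookkeeping that “execute only once” provides within a job execution.

```python
from typing import Callable, List, Set


class TransientError(Exception):
    """Stands in for an intermittent failure such as a network error."""


def run_workflow(components: List[str],
                 run_component: Callable[[str], None],
                 completed: Set[str]) -> None:
    """Run components in order, skipping those already completed in this job."""
    for name in components:
        if name in completed:
            continue  # "execute only once": finished work is not repeated
        run_component(name)
        completed.add(name)


def run_with_retry(components: List[str],
                   run_component: Callable[[str], None],
                   completed: Set[str]) -> None:
    """Try the workflow; on a transient error, run the same workflow once more."""
    try:
        run_workflow(components, run_component, completed)
    except TransientError:
        # Catch block: the same workflow again, resuming at the failed component.
        run_workflow(components, run_component, completed)


def make_flaky_runner(fail_once_on: str, calls: List[str]) -> Callable[[str], None]:
    """Build a runner that fails the first time it sees `fail_once_on`."""
    pending_failures = {fail_once_on}

    def runner(name: str) -> None:
        calls.append(name)
        if name in pending_failures:
            pending_failures.discard(name)
            raise TransientError(f"connection lost while loading {name}")

    return runner
```

In this sketch the retry is attempted only once; if the second attempt also fails, the error propagates and the job stops, which is the same outcome as an error raised inside a catch block.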

Once this idea is implemented, the execution of your SAP Rapid Marts will be more robust and optimized, but maybe not enough to fulfill your customer's expectations… so let us move on to the second step.

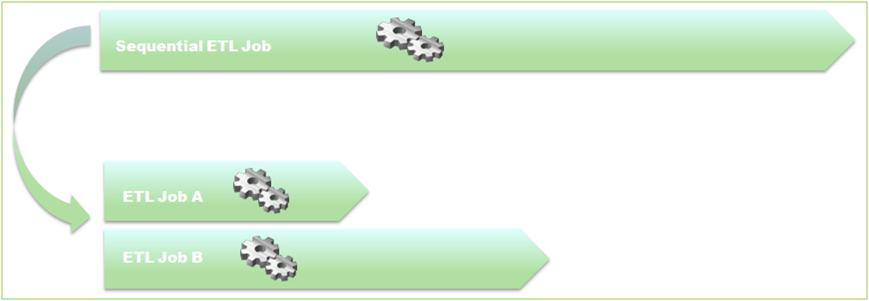

2nd Approach: Working around a parallel execution

By analyzing the Performance Reports generated in the SAP Data Services Management Console after the execution of a job, you will be able to identify the components with the worst execution times.

These components can vary from one implementation to another depending on your customer's environment. Within the top 10 worst execution times you will find some components that generate dimension and/or fact tables of the model. Some of these components can be easily removed from your sequential execution and placed in a separate job to be executed in parallel.
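If the execution times are copied out of the report into a simple list, ranking them takes a few lines. This Python sketch assumes a hypothetical list of (component name, seconds) pairs; it does not read anything from the Management Console itself, and the sample names and timings are invented.

```python
from typing import List, Tuple

# Hypothetical timings in seconds, typed in by hand from a performance report.
SAMPLE_TIMINGS: List[Tuple[str, float]] = [
    ("WF_DimCustomer", 420.0),
    ("WF_FactSalesOrders", 9800.0),
    ("WF_DimMaterial", 1310.0),
    ("WF_FactStockLevels", 15200.0),
]


def slowest_components(timings: List[Tuple[str, float]],
                       top_n: int = 10) -> List[Tuple[str, float]]:
    """Return the `top_n` components with the longest execution times."""
    return sorted(timings, key=lambda row: row[1], reverse=True)[:top_n]


if __name__ == "__main__":
    for name, seconds in slowest_components(SAMPLE_TIMINGS, top_n=3):
        print(f"{name}: {seconds / 3600:.2f} h")
```

The components at the top of this ranking are the first candidates to review for a separate, parallel job.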

It is critical at this stage to ensure that these components are completely removed from the sequential execution and that no final output of the component is used in other parts of the ETL process (e.g. subsequent table lookups). To ensure this, the function “Where is used” of the SAP Data Services Designer will be extremely helpful.
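The check itself is simple to state: a component can leave the sequential job only if nothing that stays behind reads what it writes. The Python sketch below expresses that rule over hand-built dictionaries. The dictionaries and all names in them are assumptions for illustration; in practice the information would come from inspecting the job in the Designer.

```python
from typing import Dict, List, Set


def consumers_of(candidate: str,
                 outputs: Dict[str, Set[str]],
                 inputs: Dict[str, Set[str]]) -> List[str]:
    """List the other components that read any table written by `candidate`."""
    produced = outputs.get(candidate, set())
    return sorted(
        other for other, consumed in inputs.items()
        if other != candidate and produced & consumed
    )


def safe_to_parallelize(candidate: str,
                        outputs: Dict[str, Set[str]],
                        inputs: Dict[str, Set[str]]) -> bool:
    """True when no other component depends on the candidate's output tables."""
    return not consumers_of(candidate, outputs, inputs)


# Hypothetical metadata: which tables each component writes and reads.
OUTPUTS = {
    "WF_DimMaterial": {"MATERIAL"},
    "WF_FactStockLevels": {"STOCK_FACT"},
}
INPUTS = {
    "WF_FactSalesOrders": {"MATERIAL", "CUSTOMER"},  # looks up MATERIAL
    "WF_FactStockLevels": {"MATERIAL"},
}
```

With this sample metadata, `WF_FactStockLevels` could be moved because nothing reads `STOCK_FACT`, while `WF_DimMaterial` could not, since two fact loads look up the `MATERIAL` table.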

In my experience, after applying these two steps you should see a considerable improvement in the execution performance of delta loads. To give you an example, in one of our recent implementations we started with an execution time of 17 hours for five SAP Rapid Marts running sequentially. This was reduced to 6 hours using the two approaches I have described in this post.

Digging deeper

If you still face bad performance in isolated components even after applying the previous steps, the situation will require more analysis and customization at a lower level.

Some components of the standard SAP Rapid Marts try to execute complex logic on the ERP side, which can take a very long time (e.g. SAP General Ledger RM + SAP Note 1557975 or SAP Inventory RM + SAP Note 1528553).

In these cases, the workaround is to split the process into several steps and maybe make use of custom tables on the ERP side; the performance boost will be remarkable. In our most recent implementation, one of the components was taking no less than 12 hours to run. After we analyzed the component and modified its behavior to make use of one custom table on the ERP side, it took no more than 30 minutes to run. This customization took 2 man-days to implement completely.

In conclusion, my experience with SAP Rapid Marts is very positive. SAP provides a rapid deployment solution that can be up and running end-to-end in a few weeks. Furthermore, it provides an extremely easy-to-use framework that gives your customer the ability to develop any level of customization in a few weeks. Overall, this is a solution that will allow your customers to create their own data warehouse in weeks instead of months. If we can improve delta load performance, the solution becomes even more appealing to your customers and helps to increase satisfaction levels with the tool.

That's all, folks! I hope this article will help you to raise the bar in your SAP Rapid Marts implementations. If you have any questions, feel free to leave a comment below.