Let's assume that you've been convinced by Marc's excellent article about the aggregate awareness dilemma, and that after balancing all the arguments you've decided to implement the aggregates in your Oracle database.

Two parts are necessary: the materialized views and the query rewrite mechanism.

What is a materialized view?

Think of it as a standard view: it is also based on a SELECT query. But while views are purely logical structures, materialized views are physically stored, like tables, and like tables they can be indexed. Unlike ordinary tables, however, materialized views can be refreshed (automatically or manually, as we'll see later) against their definitions.

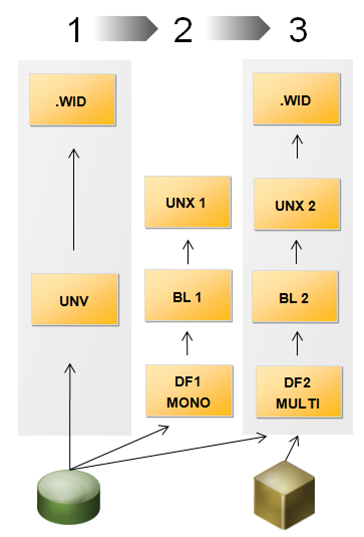

Let's imagine the following situation: a multinational company manages the financial accounts of its subsidiaries. For each period (year + month) and for each company, many thousands of records are saved in the data warehouse (each with an account code and an MTD (month-to-date) value). You'll find below a very simplified schema of this data warehouse.

What happens when we want to have the sum of all accounts for each period?

Without a materialized view, all the rows have to be retrieved so that the sum can be calculated. On my test database, the following query takes around 2 seconds, and the execution plan tells me that more than 1 million records had to be read first.

(Query 1)

    select p.year, p.month, sum(a.mtd)
    from dim_period p
    join account_balance a on a.period_key = p.period_key
    group by p.year, p.month
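One way to obtain the plan mentioned above is Oracle's `EXPLAIN PLAN` statement combined with `DBMS_XPLAN.DISPLAY`. The following is only a minimal sketch for Query 1; the plan lines and row estimates you get will depend on your data, statistics and Oracle version.

```sql
-- Store the execution plan of Query 1 in the plan table
-- (the query itself is not executed)
EXPLAIN PLAN FOR
select p.year, p.month, sum(a.mtd)
from dim_period p
join account_balance a on a.period_key = p.period_key
group by p.year, p.month;

-- Display the plan that was just stored
SELECT * FROM TABLE(DBMS_XPLAN.DISPLAY);
```

Without any materialized view, you would expect this plan to contain a full table access on ACCOUNT_BALANCE.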

So how do you avoid reading more than 1 million records? One solution is to maintain aggregate tables in your database. But that means a bigger ETL and a more complex Universe with @aggregate_aware functions. Although this could be a valid option, we've chosen to avoid it.

Another solution is to create a materialized view. The syntax can be quite simple:

(Query MV-1)

    CREATE MATERIALIZED VIEW MV_PERIODS
    BUILD IMMEDIATE
    ENABLE QUERY REWRITE
    AS
    -- the aggregate gets a column alias so that the stored column has a valid name
    select p.year, p.month, sum(a.mtd) as mtd_sum
    from dim_period p
    join account_balance a on a.period_key = p.period_key
    group by p.year, p.month

Let's go through the query lines.

- CREATE MATERIALIZED VIEW MV_PERIODS => We simply create the view and give it the name MV_PERIODS.

- BUILD IMMEDIATE => The materialized view is built (populated) right away.

- ENABLE QUERY REWRITE => If we don't specify this, then the materialized view will be created and could be accessed directly, but it wouldn't be automatically used by the query rewriting mechanism.

- The "as select…" is the same as the original query we made.

You'll notice when executing this statement that the time needed to create the materialized view is at least the time needed to execute its defining query (plus some time to physically write the rows in the database). In my case it was 2.5 seconds, slightly more than the original 2 seconds.

If I now re-execute my original query, I get the same result set as before, but instead of 2 seconds I need 16 milliseconds. So it's now around 120 times faster! Oracle understood that it could retrieve the results from the materialized view, so it only read that small structure instead of doing a full read of the fact table.
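To compare both access paths yourself, one option is the `NO_REWRITE` optimizer hint, which asks Oracle not to use query rewrite for that statement. The sketch below assumes MV_PERIODS has been created as shown above; timings will of course differ on your system.

```sql
-- Query 1 as written: eligible for query rewrite,
-- so it can be answered from MV_PERIODS
select p.year, p.month, sum(a.mtd)
from dim_period p
join account_balance a on a.period_key = p.period_key
group by p.year, p.month;

-- Same query with rewrite disabled for this statement only:
-- the base tables are read again
select /*+ NO_REWRITE */ p.year, p.month, sum(a.mtd)
from dim_period p
join account_balance a on a.period_key = p.period_key
group by p.year, p.month;

-- The materialized view can also be queried directly, like a table
select * from MV_PERIODS;
```

In the execution plan, a rewritten query typically shows an operation such as MAT_VIEW REWRITE ACCESS FULL on MV_PERIODS instead of a full scan of ACCOUNT_BALANCE.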

The data freshness

Now imagine that a month has gone by, and new rows have arrived in your data warehouse. You re-execute your original select query and, to your great surprise, it takes a long time again: 2 seconds! But why?

It is possible to ask Oracle to tell us if a query was rewritten with a given materialized view, and if not to give us the reasons. Let's see a possible syntax below.

    SET SERVEROUTPUT ON;
    DECLARE
      -- Collects the messages returned by EXPLAIN_REWRITE
      Rewrite_Array SYS.RewriteArrayType := SYS.RewriteArrayType();
      -- The query to analyse
      querytxt VARCHAR2(4000) := '
        select p.year, p.month, sum(a.mtd)
        from dim_period p, account_balance a
        where a.period_key = p.period_key
        group by p.year, p.month
      ';
      no_of_msgs NUMBER;
    BEGIN
      -- Ask Oracle whether querytxt can be rewritten to use MV_PERIODS
      dbms_mview.Explain_Rewrite(querytxt, 'MV_PERIODS', Rewrite_Array);
      no_of_msgs := rewrite_array.count;
      -- Print every message (the loop index i is declared implicitly)
      FOR i IN 1..no_of_msgs
      LOOP
        DBMS_OUTPUT.PUT_LINE('>> MV_NAME : ' || Rewrite_Array(i).mv_name);
        DBMS_OUTPUT.PUT_LINE('>> MESSAGE : ' || Rewrite_Array(i).message);
      END LOOP;
    END;
    /

(Only the query text and the materialized view name need to be adapted to your case; the rest should stay as is.)

Once I executed these lines, I got the following result:

    >> MV_NAME : MV_PERIODS
    >> MESSAGE : QSM-01150: query did not rewrite
    >> MV_NAME : MV_PERIODS
    >> MESSAGE : QSM-01029: materialized view, MV_PERIODS, is stale in ENFORCED integrity mode

(Technical note: to see these lines in Oracle SQL Developer, you need to activate the DBMS output: open the menu View / DBMS Output and then click on the button 'Enable DBMS Output for the connection'.)

The line "materialized view, MV_PERIODS, is stale in ENFORCED integrity mode" means that the materialized view is not used because its content no longer reflects the data in the base tables. So to be able to use the query rewrite process once again, we need to refresh the view with the following syntax (the 'C' argument requests a complete refresh):

BEGIN DBMS_SNAPSHOT.REFRESH('MV_PERIODS','C'); end;
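As a hedged sketch of the refresh options: the same call is also available under the name `DBMS_MVIEW` (both names refer to the same package), the data dictionary view `USER_MVIEWS` can be used to check the result, and a refresh schedule can be attached to the view itself. The daily interval below is only an illustrative assumption, not a recommendation.

```sql
-- Manual complete refresh, written with the DBMS_MVIEW name
BEGIN
  DBMS_MVIEW.REFRESH('MV_PERIODS', 'C');
END;
/

-- Check the state recorded in the data dictionary
-- (STALENESS shows values such as FRESH, STALE or NEEDS_COMPILE)
SELECT mview_name, staleness, last_refresh_type, last_refresh_date
FROM user_mviews
WHERE mview_name = 'MV_PERIODS';

-- Automatic refresh: complete refresh starting now, then once a day
-- (the interval is an assumption made for this example)
ALTER MATERIALIZED VIEW MV_PERIODS
  REFRESH COMPLETE
  START WITH SYSDATE
  NEXT SYSDATE + 1;
```

In a data warehouse, it is often simpler to trigger the manual refresh at the end of the ETL load rather than on a fixed schedule.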

Note that in certain situations, end users may prefer getting yesterday's data in 1 second rather than today's data in 5 minutes. In that case, choose the STALE_TOLERATED integrity mode (rather than the default, ENFORCED) and the query will be rewritten even if the data in the materialized view is no longer fresh.
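The integrity mode is controlled by the `QUERY_REWRITE_INTEGRITY` initialization parameter. A minimal sketch, assuming you only want to change it for the current session:

```sql
-- Allow query rewrite against stale materialized views (current session only)
ALTER SESSION SET QUERY_REWRITE_INTEGRITY = STALE_TOLERATED;

-- Go back to the default behaviour
ALTER SESSION SET QUERY_REWRITE_INTEGRITY = ENFORCED;
```

The same parameter can be set for the whole instance with `ALTER SYSTEM`, but then every query may silently return stale aggregates, so the session level is usually the safer place to start.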

Extend your materialized views

Now let's imagine that we want to have not only the account sums by periods, but also by company code. Our new SQL query is the following:

(Query 2)

    select p.year, p.month, c.company_code, sum(a.mtd)
    from dim_period p, account_balance a, dim_company c
    where a.period_key = p.period_key
    and a.company_key = c.company_key
    group by p.year, p.month, c.company_code

Of course the materialized view MV_PERIODS doesn't have the necessary information (company key or company code) and cannot be used to rewrite this query. So let's create another materialized view.

(Query MV-3)

    CREATE MATERIALIZED VIEW MV_PERIODS_COMPANIES
    BUILD IMMEDIATE
    ENABLE QUERY REWRITE
    AS
    -- the aggregate gets a column alias so that the stored column has a valid name
    select p.year, p.month, c.company_code, sum(a.mtd) as mtd_sum
    from dim_period p, account_balance a, dim_company c
    where a.period_key = p.period_key
    and a.company_key = c.company_key
    group by p.year, p.month, c.company_code

So now our query takes a very short time to complete. But what if, after having dropped the MV_PERIODS materialized view, you try to execute the first query (the one without the companies)? The query rewrite mechanism will work as well! Oracle will understand that it can use the content of MV_PERIODS_COMPANIES to calculate the sums more quickly.

Be aware that the query will only be rewritten if you have created a foreign key relationship between ACCOUNT_BALANCE.COMPANY_KEY and DIM_COMPANY.COMPANY_KEY. Otherwise you'll get the following message:

QSM-01284: materialized view MV_PERIODS_COMPANIES has an anchor table DIM_COMPANY not found in query.
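A sketch of how such a relationship could be declared follows. The constraint names are hypothetical, and the first statement assumes that DIM_COMPANY.COMPANY_KEY is not yet declared as a primary key.

```sql
-- Assumption: the dimension key is not yet declared as primary key
ALTER TABLE dim_company
  ADD CONSTRAINT pk_dim_company PRIMARY KEY (company_key);

-- Foreign key from the fact table to the dimension
-- (constraint name chosen for this example)
ALTER TABLE account_balance
  ADD CONSTRAINT fk_account_balance_company
  FOREIGN KEY (company_key) REFERENCES dim_company (company_key);
```

For Oracle to consider the join lossless, the foreign key column ACCOUNT_BALANCE.COMPANY_KEY should also be declared NOT NULL.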

Is basing the materialized view on the keys an option?

The materialized views we've created are very interesting but still a bit static. You may ask yourself: wouldn't it have been a better idea to base the materialized view on the keys? For example with the following syntax:

(Query MV-4)

    CREATE MATERIALIZED VIEW MV_PERIODS_COMPANIES_keys
    BUILD IMMEDIATE
    ENABLE QUERY REWRITE
    AS
    -- the aggregate gets a column alias so that the stored column has a valid name
    select period_key, company_key, sum(mtd) as mtd_sum
    from account_balance
    group by period_key, company_key

The answer is "it depends". On the plus side, this allows for greater flexibility, as you're not limited to a few fields only (as in the query MV-1, where you're limited to year and month). On the minus side, as the materialized view contains no joins, the joins to the dimension tables have to be made at run time, which has an impact on query performance (but even then, the query time will be much better than without materialized views).

So if you want a flexible solution because you don't know yet which fields the users will need, it's probably better to use the keys. But if you already know the precise queries which will come (for example for pre-defined reports), it may be worth using the needed fields in the definition of the materialized view rather than the keys.

If you have any questions or further information on this topic, please leave a comment below.