In the last two months I have visited a couple of customers who were interested in integrating SAP Strategy Management 10.0 with SAP BusinessObjects BI Platform 4.0. In this post I would like to explain what that integration is about.

First of all, a brief introduction to the solution. SAP Strategy Management (SSM) lets you align the company's Strategy Plan with its key objectives and spread it across the whole organization. It is an out-of-the-box Enterprise Performance Management solution in which you can place your company's most important KPIs in Balanced Scorecards or Strategy Maps and monitor their performance against the company's Strategy. Within SSM you can also create initiatives to implement improvements or corrective actions and link them to the objectives or KPIs.

Sometimes the customer needs reports or further analysis on top of SSM that cannot be covered with the standard functionality of the solution. In that case, the best option is to integrate SSM with the SAP BusinessObjects reporting tools. Some examples of what you can do with that integration:

- Implement bespoke Dashboards with your SSM KPIs and Objectives

- Implement universes and allow users to exploit information from SSM with Web Intelligence without consuming additional SSM licenses

- Implement pixel-perfect Crystal Reports on top of the SSM

- Foster ownership with Publications. For example, send a list of KPIs that are not performing well to their responsible users

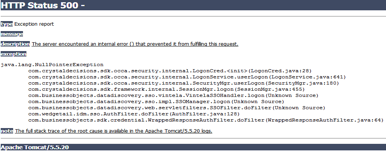

To implement this integration, you can follow the SAP SSM Configuration guide. However, in some cases the documentation is missing and you have to go off script. In other cases, the existing documentation has not yet been updated for BI 4.0 and SSM 10.0. And finally, sometimes the documentation is wrong or the software has bugs, and you cannot set up the integration.

In this post we will analyze the following scenarios:

- Web Intelligence reporting on top of an SSM Model

- Web Intelligence reporting on top of the SSM Data Model (Clariba-developed solution)

- Crystal Reports on top of SSM (exploring different options)

- Dashboards on top of SSM (exploring different options)

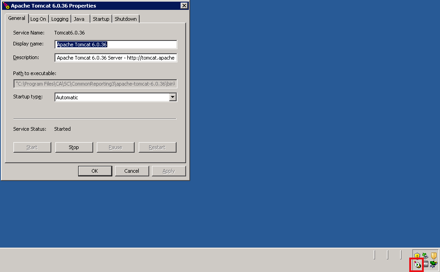

These scenarios have been implemented with the following software components:

- SAP NetWeaver 7.3 SP08

- SAP SSM 10.0 SP06

- SAP BusinessObjects BI Platform 4.0 SP05

- Crystal Reports 2011 SP05

- Dashboards 4.0 SP05

1. Web Intelligence reporting on top of an SSM Model

As per the SAP documentation, we can set up the ODBO Provider in order to build a Universe on top of SSM Models. The drawback is that we still have to use the Universe Designer instead of the Information Design Tool of BI 4.0. Below are the steps for setting up the ODBO Provider and implementing your first report on top of SSM:

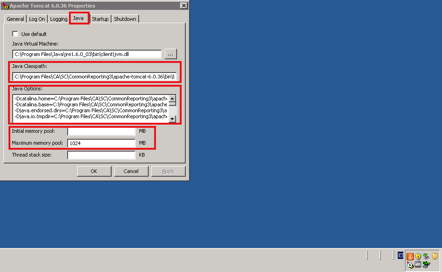

- Go to your BO 4.0 server and make sure it has a Multidimensional Analysis Service (MDAS). Stop the MDAS Server and the Connection Server in the Central Configuration Manager

- Copy the ODBOProvider folder from the <drive>:\Program Files (x86)\SAP BusinessObjects\Strategy Management\InternetPub path of your SSM server to the BO server

- On the BO server, run the SSMProviderReg.bat file that comes from the SSM server. Make sure you have administrator rights on the BO server. Once it completes, use regedit to check that the SSMProvider.1 entry exists in the Windows registry

- Modify the Windows registry to insert the following string value under this key (assuming that you are using a 64-bit Windows): HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\SAP\SSM\ODBOProvider "servletUri"="/strategyServer/ODBOProviderServlet"

- Access the following path on the BO server and add the DataBase entry shown below to the connection configuration found there: <drive>:\Program Files (x86)\SAP BusinessObjects\SAP BusinessObjects Enterprise XI 4.0\dataAccess\connectionServer\oledb_olap

<DataBase Active="Yes" Name="Strategy Management 10.0">

<Aliases>

<Alias>Strategy Management 10.0</Alias>

</Aliases>

<Library>dbd_sqlsrvas</Library>

<Parameter Name="Family">SAP BusinessObjects</Parameter>

<Parameter Name="Extensions">sqlsrv_as2005,sqlsrv_as,oledb_olap</Parameter>

<Parameter Name="MSOlap CLSID">SSMProvider.1</Parameter>

</DataBase>

- Start the MDAS Server and the Connection Server
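The registry step above can also be prepared as a .reg file rather than typed by hand in regedit. The following is only a minimal sketch: the helper name `build_reg_file` is hypothetical, and the key path is an assumption for a 64-bit Windows host that you should confirm in regedit before importing anything.

```python
def build_reg_file(key_path: str, values: dict[str, str]) -> str:
    """Return the text of a .reg file that sets string values under key_path."""
    lines = ["Windows Registry Editor Version 5.00", "", f"[{key_path}]"]
    for name, data in values.items():
        # Backslashes and double quotes must be escaped inside .reg string data.
        escaped = data.replace("\\", "\\\\").replace('"', '\\"')
        lines.append(f'"{name}"="{escaped}"')
    # .reg files conventionally use Windows line endings.
    return "\r\n".join(lines) + "\r\n"


# Assumed key path for 64-bit Windows; verify it on your own BO server.
SSM_KEY = r"HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\SAP\SSM\ODBOProvider"

reg_text = build_reg_file(
    SSM_KEY, {"servletUri": "/strategyServer/ODBOProviderServlet"}
)
print(reg_text)
```

The printed text can be saved to a file and imported with regedit or `reg import`, run as an administrator. The advantage over manual editing is that the same file can be reviewed and reused on other BO servers.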

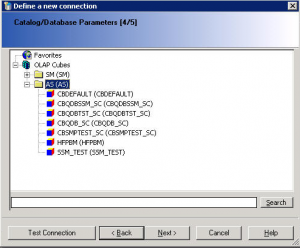

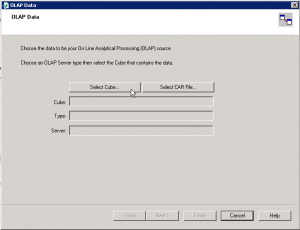
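A malformed DataBase entry is an easy mistake to make when editing the connection configuration by hand, so it can be worth checking the fragment before restarting the servers. The sketch below parses the entry from the steps above with Python's standard library. The function name `check_entry` and the specific checks (active flag, alias matching the name, CLSID matching the registered provider) are our own assumptions about what is worth verifying, not rules taken from the SAP documentation.

```python
import xml.etree.ElementTree as ET

# The entry from the steps above, pasted as-is.
ENTRY = """
<DataBase Active="Yes" Name="Strategy Management 10.0">
  <Aliases>
    <Alias>Strategy Management 10.0</Alias>
  </Aliases>
  <Library>dbd_sqlsrvas</Library>
  <Parameter Name="Family">SAP BusinessObjects</Parameter>
  <Parameter Name="Extensions">sqlsrv_as2005,sqlsrv_as,oledb_olap</Parameter>
  <Parameter Name="MSOlap CLSID">SSMProvider.1</Parameter>
</DataBase>
"""


def check_entry(xml_text: str, expected_prog_id: str) -> list[str]:
    """Return a list of problems found in a DataBase entry (empty if none)."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]
    problems = []
    if root.tag != "DataBase":
        problems.append(f"unexpected root element {root.tag!r}")
    if root.get("Active") != "Yes":
        problems.append("entry is not active")
    # Collect <Parameter Name="...">value</Parameter> pairs.
    params = {p.get("Name"): (p.text or "").strip() for p in root.findall("Parameter")}
    if params.get("MSOlap CLSID") != expected_prog_id:
        problems.append("MSOlap CLSID does not match the registered provider")
    aliases = [a.text for a in root.findall("Aliases/Alias")]
    if root.get("Name") not in aliases:
        problems.append("Name is not listed among the aliases")
    return problems


print(check_entry(ENTRY, "SSMProvider.1"))
```

An empty list means the fragment is well formed and the CLSID parameter matches the SSMProvider.1 entry registered earlier.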

- Now we can go to the Universe Designer and start implementing a Universe on top of our Model. The first step is defining the connection. In the connection list (retrieved from the Connection Server) we can now see the new entry we added, Strategy Management 10.0.

- Define the connection parameters. You must be an SSM user with the proper permissions, and you must provide the server's fully qualified domain name (FQDN) and its port.

- Once connected to the SSM server, you will see the list of available Cubes. The AS category gives access to the measures (based on attributes and dimensions) in the Application Server model. The SM Adapter gives access to the strategy dimension, which represents the strategy management dimensions Scorecard and Initiative. Scorecard details that do not relate to the KPI, such as comments, are not presented.

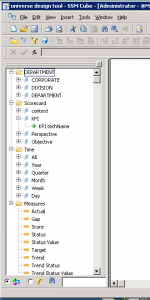

- And finally we will see our universe with the available dimensions, the standard classes (Time and Scorecards) and the measures. You can display the technical names of the objects as detail or you can define hierarchies of Perspectives, Objectives and KPIs.

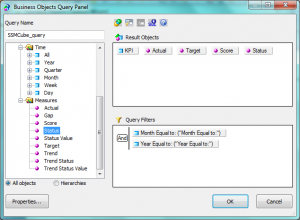

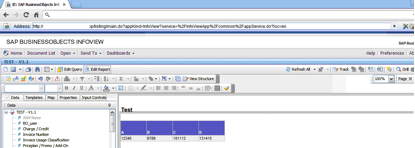

- Now we can publish the universe and go to Web Intelligence to start implementing our reports on top of the SSM models.
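Since the connection step requires a fully qualified server name and a port, a quick pre-check can catch a short hostname before it is typed into the connection wizard. This is a rough sketch only: the `host:port` input format, the function names, and the example hostname are hypothetical and not part of the SSM or BO tooling.

```python
import re

# One DNS label: 1-63 letters, digits or hyphens, not starting or ending with a hyphen.
_LABEL = re.compile(r"^(?!-)[A-Za-z0-9-]{1,63}(?<!-)$")


def looks_like_fqdn(host: str) -> bool:
    """Rough syntactic check: at least two valid labels and a non-numeric last label."""
    host = host.rstrip(".")
    labels = host.split(".")
    if len(host) > 253 or len(labels) < 2:
        return False
    if labels[-1].isdigit():  # rules out plain IPv4 addresses
        return False
    return all(_LABEL.match(label) for label in labels)


def parse_server(address: str) -> tuple[str, int]:
    """Split 'host:port' and validate both parts, raising ValueError on problems."""
    host, sep, port_text = address.rpartition(":")
    if not sep or not port_text.isdigit():
        raise ValueError("expected an address of the form host:port")
    port = int(port_text)
    if not 1 <= port <= 65535:
        raise ValueError(f"port {port} is out of range")
    if not looks_like_fqdn(host):
        raise ValueError(f"{host!r} is not a fully qualified domain name")
    return host, port


# Hypothetical server name and port, for illustration only.
print(parse_server("ssm01.example.com:50000"))
```

The check is purely syntactic. It does not confirm that the name resolves or that the SSM server is listening on that port.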

2. Web Intelligence reporting on top of the SSM Data Model

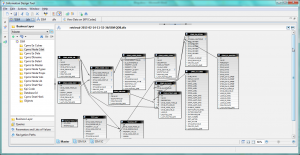

This is a solution you can implement if you have advanced knowledge of the SSM Data Model. You can implement a UNX Universe with the Information Design Tool by linking all the tables of the Entry and Approval, the Nodes of the Scorecard, the Cube Builder or the Initiatives. The advantage of this option is that you have access to extra information not available in the SSM Cube, such as the Initiatives, the users related to specific KPIs, the attributes of the KPIs, etc.

If you are interested in such an option, please contact us. Keep in mind that it is not an option supported by SAP, but we have implemented it many times and we know it works.

3. Crystal Reports on top of SSM

SAP Crystal Reports can be integrated with SSM in four different ways:

- Implementing a Query as a Web Service from the Universe, built in previous steps: we have managed to implement that scenario

- Connecting Crystal Reports to the Universe on top of the SSM Models we have built in the first step: that scenario is not working although we followed the instructions from SAP

- Using an OLE DB (ADO) connection: this option, not explained by SAP, is available if we install the ODBO provider but we have not managed to make it work

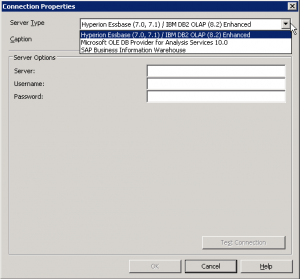

- Using the OLAP Connection: according to the SAP documentation, we can build an OLAP Cube Report in Crystal Reports. We should be able to select the Strategy Management option in the following screen to enter the SSM connection data, but we have not been able to find that option

4. Dashboards using Web Services Connections

Using Dashboards, you have two different ways to implement the access to data:

- Implement a Query as a Web Service (QaaWS) to retrieve the relevant information from the Universes we had implemented before.

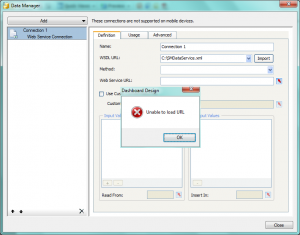

- Use the Web Services available in SAP NetWeaver to retrieve information from SSM: according to the SAP documentation, you need to download the WSDL files of the SMDataServiceService and CubeServiceService applications and call the functions within them. With our Dashboards 4.0 SP05 we have not been able to process the WSDL files, as the tool is unable to load the URL.
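When a tool refuses to load a WSDL URL, one way to narrow down the cause is to save the WSDL from a browser and inspect it outside the tool. The sketch below lists the operations declared in a WSDL 1.1 document using only the Python standard library. The sample document and its operation names are invented for illustration and are not the real SSM service definitions, and `list_operations` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Namespace of WSDL 1.1 documents.
WSDL_NS = {"wsdl": "http://schemas.xmlsoap.org/wsdl/"}


def list_operations(wsdl_text: str) -> dict[str, list[str]]:
    """Map each portType name in a WSDL 1.1 document to its operation names."""
    root = ET.fromstring(wsdl_text)
    result = {}
    for port_type in root.findall("wsdl:portType", WSDL_NS):
        operations = port_type.findall("wsdl:operation", WSDL_NS)
        result[port_type.get("name")] = [op.get("name") for op in operations]
    return result


# Invented sample; replace it with the text of the WSDL saved from your server.
SAMPLE = """<?xml version="1.0"?>
<definitions xmlns="http://schemas.xmlsoap.org/wsdl/" name="ExampleService">
  <portType name="ExamplePort">
    <operation name="getExampleData"/>
    <operation name="getExampleList"/>
  </portType>
</definitions>"""

print(list_operations(SAMPLE))
```

If the saved file parses and lists its operations here, the WSDL itself is probably fine and the problem more likely lies in how the tool reaches the URL (authentication, proxy or similar).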

Summary

Providing SAP Strategy Management information to SAP BusinessObjects BI Platform 4.0 can enhance the capabilities of your Strategy system. However, the integration is not easy, given how little information exists on the topic and the quality of what does exist. We tried to implement all possible integration scenarios and succeeded with Web Intelligence. This is the route we recommend, as the scenarios related to Crystal Reports and Dashboards were not working when using SSM 10.0 and BI 4.0.

We will be following up on these issues and will let you know if we finally manage to solve them. If you have any suggestions or have found a workaround for these issues, please leave a comment below.